Start voice interaction

This demo achieves voice control by combining iFLYTEK's offline command word recognition, iFLYTEK's offline speech synthesis, and a VAD (voice activity detection) algorithm.

Offline command word recognition detects command words in the microphone input.

Offline speech synthesis generates the spoken feedback output.

VAD captures the microphone input and saves it.

1 Implementation process

The VAD algorithm measures the energy of the surrounding audio in real time and uses an energy threshold to decide whether it is speech. Audio judged to be speech is saved and passed to offline keyword detection, which checks whether it contains the wake-up keyword. If it does, the result is fed back to the wake-up state machine; otherwise the robot stays dormant.
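The energy-threshold idea can be sketched in Python as follows. This is not the code in `vad_record.py`; the frame size, the assumed 16 kHz sample rate, the RMS threshold and the hangover length are hypothetical values chosen for illustration. Each returned segment is what would be saved and handed to offline keyword detection.

```python
import math
from typing import List, Sequence, Tuple

FRAME_SIZE = 320          # samples per frame: 20 ms at an assumed 16 kHz rate
ENERGY_THRESHOLD = 500.0  # hypothetical RMS threshold; tune for your microphone
HANGOVER_FRAMES = 10      # quiet frames tolerated before a segment is closed


def frame_rms(frame: Sequence[int]) -> float:
    """Root-mean-square energy of one frame of 16-bit PCM samples."""
    if len(frame) == 0:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def detect_speech(samples: Sequence[int],
                  frame_size: int = FRAME_SIZE,
                  threshold: float = ENERGY_THRESHOLD,
                  hangover: int = HANGOVER_FRAMES) -> List[Tuple[int, int]]:
    """Return (start, end) sample indices of segments whose energy exceeds the threshold."""
    segments: List[Tuple[int, int]] = []
    start = None   # first sample of the segment being collected
    last_end = 0   # end of the most recent loud frame
    quiet = 0      # consecutive quiet frames since that loud frame
    for i in range(0, len(samples), frame_size):
        frame = samples[i:i + frame_size]
        if frame_rms(frame) >= threshold:
            if start is None:
                start = i
            last_end = i + len(frame)
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet > hangover:
                # Enough silence: the utterance is over, save it.
                segments.append((start, last_end))
                start, quiet = None, 0
    if start is not None:
        segments.append((start, last_end))  # speech ran to the end of the buffer
    return segments
```

The hangover keeps short pauses inside one sentence from splitting it into several segments.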

When the wake-up state machine is in the awake state and a valid command is issued, the robot executes the corresponding command and, once it has finished, returns the state machine to sleep mode.
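A minimal Python sketch of the wake-up state machine described above. It is not the handsfree_speech implementation: the class and method names are hypothetical, the wake word is matched as the plain string `Xiaodao`, and an unrecognized command is assumed to leave the robot awake, since the text above does not say what happens in that case.

```python
from enum import Enum
from typing import Callable, Dict, Optional


class State(Enum):
    SLEEP = "sleep"
    AWAKE = "awake"


class WakeStateMachine:
    """Sleep until the wake word is heard, run one valid command, then sleep again."""

    def __init__(self, wake_word: str, commands: Dict[str, Callable[[], None]]):
        self.wake_word = wake_word
        self.commands = commands   # command word -> action to execute
        self.state = State.SLEEP

    def on_speech(self, text: str) -> Optional[str]:
        """Feed one recognized utterance; return the executed command, if any."""
        if self.state is State.SLEEP:
            if self.wake_word in text:
                self.state = State.AWAKE
            return None
        action = self.commands.get(text)
        if action is None:
            return None  # assumption: stay awake and wait for a valid command
        action()
        self.state = State.SLEEP  # back to sleep once the command has run
        return text
```

For example, `WakeStateMachine("Xiaodao", {"前进": move_forward})` ignores `前进` until it has heard `Xiaodao`, where `move_forward` stands for whatever function drives the robot.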

2 How to use

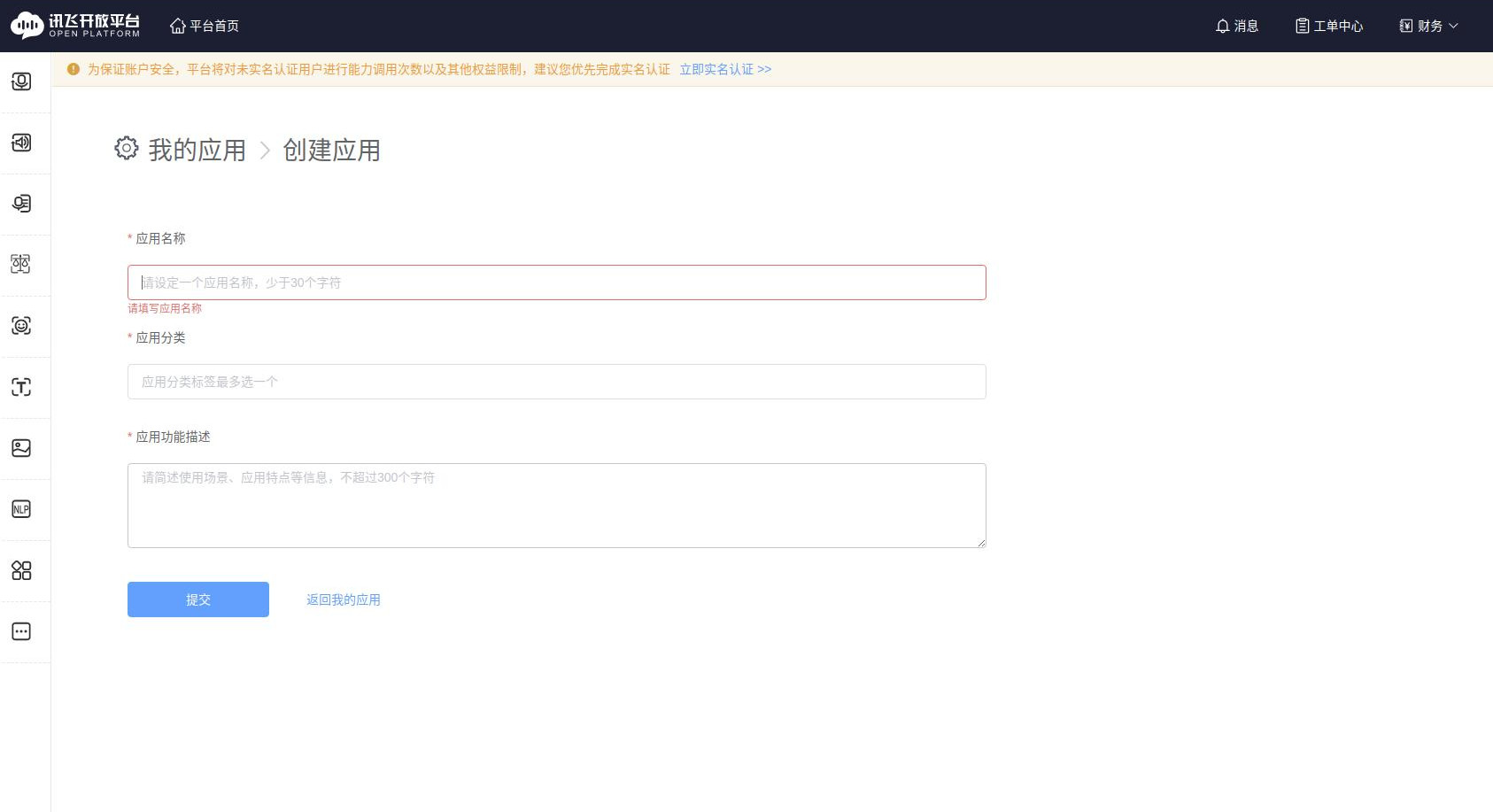

- Go to the iFLYTEK official website and register an account; you can also scan the QR code to log in quickly.

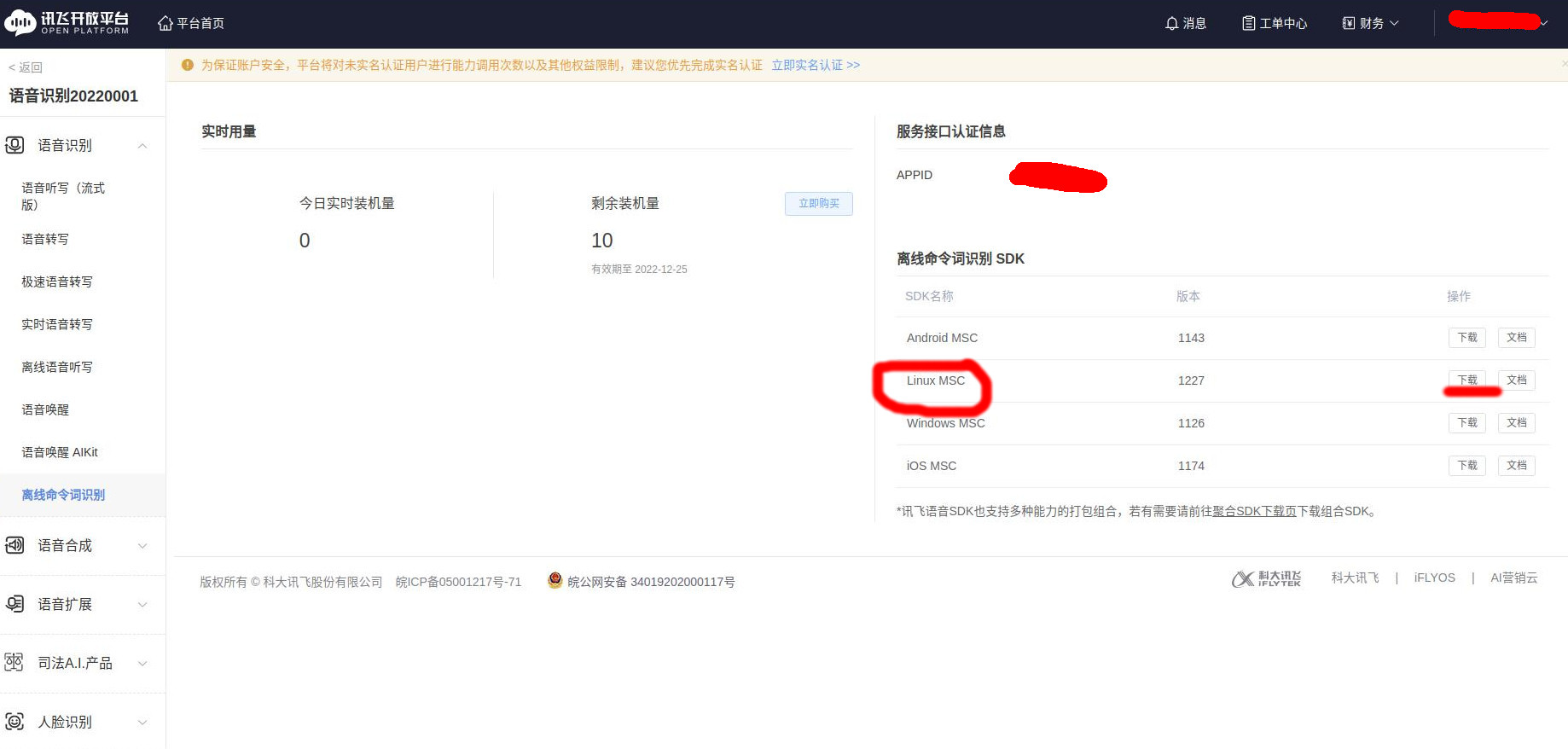

- First create your own application. Once it is created, you will receive an appid, which is needed in a later step. You can find it under "My Applications".

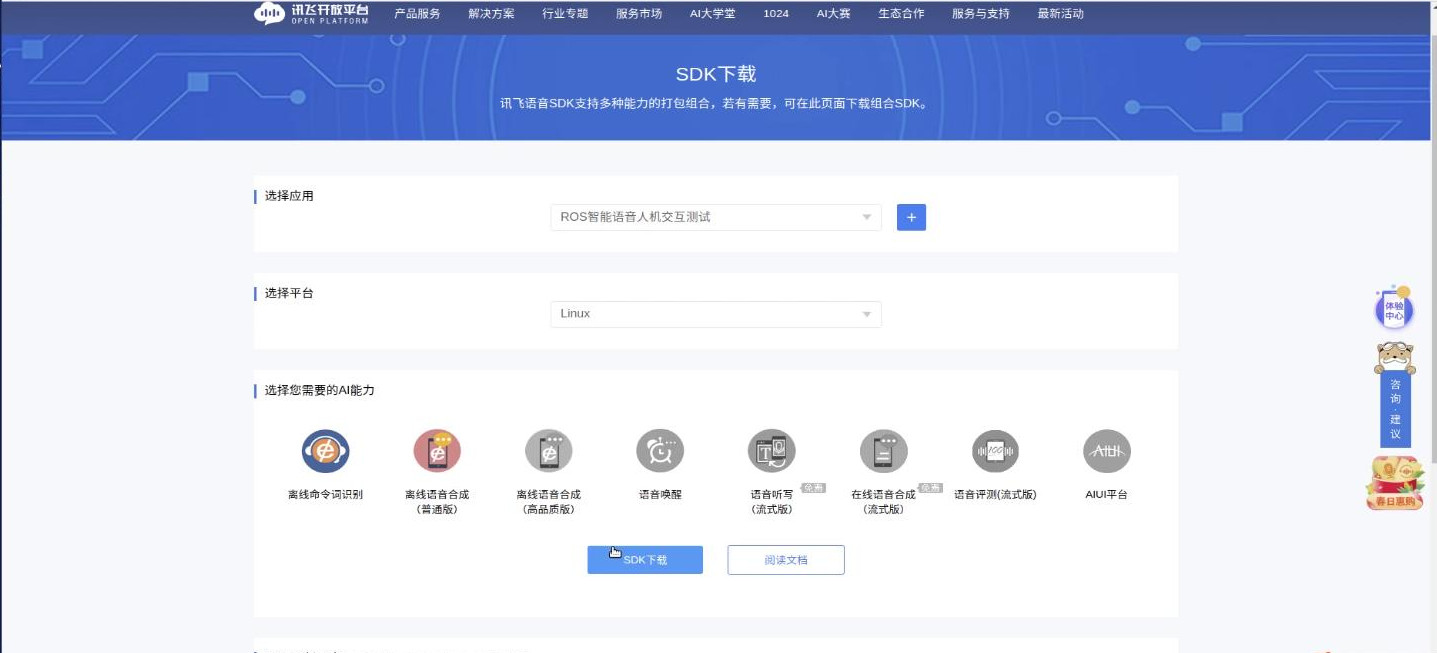

- In the navigation bar, select SDK download and download the Offline Speech Synthesis and Offline Command Word Recognition SDKs. You can package the two SDKs together and download them as a single archive.

- Unzip the downloaded package. Copy `handsfree_speech/cfg/msc/res/asr/talking.bnf` into `bin/msc/res/asr` inside the folder you just extracted. Then copy the SDK's two folders `bin/msc/res/tts` and `bin/msc/res/asr` over the paths of the same name under the `cfg` directory of the speech demo we provide, namely `handsfree_speech/cfg/`. (There are two `common.jet` files, one for offline speech synthesis and one for offline command word recognition; each must end up in its own matching folder.)

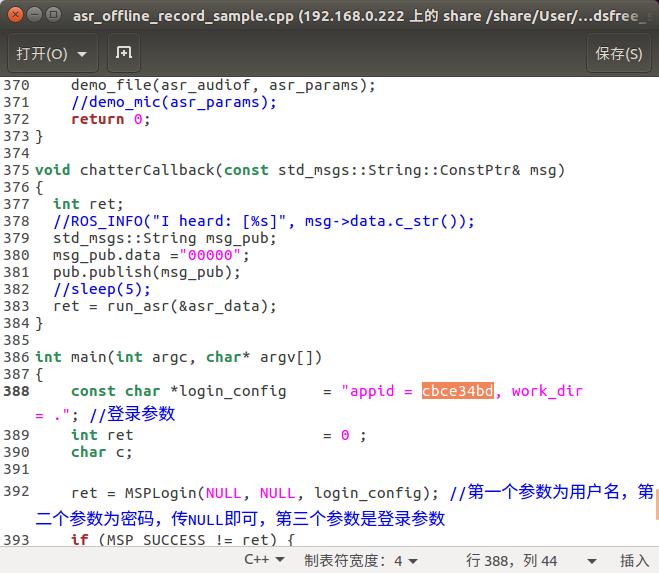

- Modify the appid login parameter in `handsfree_speech/src/Voice_feedback/tts_offline_sample.cpp` and `handsfree_speech/src/Voice_recognition/asr_offline_record_sample.cpp`. Each user's SDK is bound to that user's appid, so if the appid does not match, the SDK reports an error.
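The two setup steps above (copying the resource files and editing the appid) can be scripted. The Python sketch below is a hypothetical helper, not part of handsfree_speech. It assumes the SDK unzips to a folder containing `bin/msc/res/tts` and `bin/msc/res/asr`, that the package's resources live under `cfg/msc/res/` (as the `talking.bnf` path suggests), and that each source file contains the appid as `appid = <value>` inside its login parameter string. If the pattern does not match your files, edit them by hand. `dirs_exist_ok` requires Python 3.8 or later.

```python
import re
import shutil
from pathlib import Path
from typing import Iterable

# Assumption: the appid sits in a login parameter string such as
# "appid = 12345678, work_dir = ." (the value ends at a comma, quote or space).
APPID_PATTERN = re.compile(r'(appid\s*=\s*)[^,"\s]+')

# The two files named in the step above, relative to the handsfree_speech package.
APPID_FILES = [
    "src/Voice_feedback/tts_offline_sample.cpp",
    "src/Voice_recognition/asr_offline_record_sample.cpp",
]


def install_resources(sdk_dir: Path, speech_pkg: Path) -> None:
    """Copy talking.bnf into the SDK, then overwrite the package's cfg resources."""
    sdk_res = sdk_dir / "bin" / "msc" / "res"
    cfg_res = speech_pkg / "cfg" / "msc" / "res"
    # Step 1: grammar file from the demo -> the SDK's asr folder.
    shutil.copy2(cfg_res / "asr" / "talking.bnf", sdk_res / "asr" / "talking.bnf")
    # Step 2: the SDK's tts and asr folders overwrite the same-named folders in cfg,
    # so each common.jet lands next to the engine it belongs to.
    for sub in ("tts", "asr"):
        shutil.copytree(sdk_res / sub, cfg_res / sub, dirs_exist_ok=True)


def set_appid(source: str, appid: str) -> str:
    """Return `source` with every appid value replaced by `appid`."""
    # A function replacement avoids "\1" followed by digits being read as a group number.
    return APPID_PATTERN.sub(lambda m: m.group(1) + appid, source)


def patch_appid(speech_pkg: Path, appid: str, files: Iterable[str] = APPID_FILES) -> None:
    """Rewrite the appid in each listed source file in place."""
    for rel in files:
        path = speech_pkg / rel
        text = path.read_text(encoding="utf-8")
        path.write_text(set_appid(text, appid), encoding="utf-8")
```

For example, `install_resources(Path("/path/to/sdk"), Path("/path/to/handsfree_speech"))` followed by `patch_appid(Path("/path/to/handsfree_speech"), "your_appid")`; both paths and the appid are placeholders.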

Return to the `handsfree_ros_ws/` directory, open a new terminal, and compile with `catkin_make`. Then close all terminals, open a new terminal, and run the commands below. At minimum, the voice interaction node and the VAD voice activity detection node must be running; start the other nodes only when you need their functions. Note that running several of these functions at the same time currently still causes problems, because the linkage between them is not yet complete.

//Voice interaction node

roslaunch handsfree_speech offline_interactive_ros.launch

//VAD voice activity detection node

rosrun handsfree_speech vad_record.py

//Voice control driver node (optional; used for basic movements such as `前进` (forward) and `后退` (backward))

rosrun handsfree_speech cn_voice_cmd_vel.py

//Voice navigation node (optional, only needs to be started when performing voice navigation)

rosrun handsfree_speech set_goal.py

//Voice patrol node (optional, only needs to be started when performing voice patrol)

rosrun handsfree_speech multi_point_patrol.py
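If you start these nodes often, a small launcher can encode the rule that the voice interaction and VAD nodes are always needed while the others are optional. This Python sketch is a hypothetical convenience script, not part of handsfree_speech: the feature names `move`, `navigate` and `patrol` are made up, and allowing only one optional node at a time is an assumption based on the note that running several functions together is still unreliable. It also assumes the workspace's `devel/setup.bash` has already been sourced in the terminal.

```python
import subprocess
import sys
from typing import Iterable, List

# Nodes that must always run (taken from the commands listed above).
REQUIRED = [
    ["roslaunch", "handsfree_speech", "offline_interactive_ros.launch"],
    ["rosrun", "handsfree_speech", "vad_record.py"],
]

# Optional nodes; the short feature names are hypothetical labels.
OPTIONAL = {
    "move": ["rosrun", "handsfree_speech", "cn_voice_cmd_vel.py"],
    "navigate": ["rosrun", "handsfree_speech", "set_goal.py"],
    "patrol": ["rosrun", "handsfree_speech", "multi_point_patrol.py"],
}


def build_commands(features: Iterable[str] = ()) -> List[List[str]]:
    """Return the command lines to start, allowing at most one optional node."""
    chosen = list(features)
    if len(chosen) > 1:
        # Assumption: combining several optional functions is not yet reliable.
        raise ValueError("start at most one optional function at a time")
    for name in chosen:
        if name not in OPTIONAL:
            raise ValueError(f"unknown feature: {name}")
    return [list(c) for c in REQUIRED] + [list(OPTIONAL[n]) for n in chosen]


def launch(features: Iterable[str] = ()) -> None:
    """Start every node as a child process; terminate them all on Ctrl+C."""
    procs = [subprocess.Popen(cmd) for cmd in build_commands(features)]
    try:
        for p in procs:
            p.wait()
    except KeyboardInterrupt:
        for p in procs:
            p.terminate()


if __name__ == "__main__":
    launch(sys.argv[1:])
```

Saved as, say, `launch_voice.py` (a placeholder name), `python3 launch_voice.py move` would start the two required nodes plus the voice control driver node.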

We can first check the effect in the simulation environment; the corresponding simulation tools are provided in HandsFree. Run the three nodes above (the voice interaction node, the VAD voice activity detection node, and the voice control driver node), then run one of the following commands (you can choose either of the two) to test voice control of the robot in simulation.

(Wake the program with the wake-up word Xiaodao, then use basic voice commands such as 前进 (forward), 后退 (backward), 左转 (turn left), and 右转 (turn right) to move the robot in the simulation environment. See the follow-up tutorials for details.)

roslaunch handsfree_2dnav navigation.launch

Either command starts the simulation environment; if system resources are limited, running just one of them is enough for the simulation test. In simulation, you can check how the voice interaction behaves and debug the robot's driving functions.
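The basic commands (前进, 后退, 左转, 右转) ultimately become velocity commands for the robot. The sketch below shows one way to map a recognized command word to a (linear, angular) pair. It is an assumption about what `cn_voice_cmd_vel.py` does, not its actual code, and the speeds are hypothetical. It follows the ROS convention that a positive angular z velocity is a counter-clockwise (left) turn.

```python
from typing import Dict, Optional, Tuple

# Hypothetical speeds; the values used by cn_voice_cmd_vel.py may differ.
LINEAR_SPEED = 0.2   # m/s
ANGULAR_SPEED = 0.5  # rad/s

# Command word -> (linear x, angular z)
COMMANDS: Dict[str, Tuple[float, float]] = {
    "前进": (LINEAR_SPEED, 0.0),    # forward
    "后退": (-LINEAR_SPEED, 0.0),   # backward
    "左转": (0.0, ANGULAR_SPEED),   # turn left (counter-clockwise)
    "右转": (0.0, -ANGULAR_SPEED),  # turn right (clockwise)
}


def command_to_velocity(word: str) -> Optional[Tuple[float, float]]:
    """Map a recognized command word to (linear x, angular z), or None if unknown."""
    return COMMANDS.get(word.strip())
```

In a rospy node, such a pair would typically fill `linear.x` and `angular.z` of a `geometry_msgs/Twist` message published to the robot's velocity topic; an unknown word returns `None` so the caller can ignore it.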

To control a real robot by voice, you also need to run the following command to start the hardware drive control node.

//Drive control node

roslaunch handsfree_hw handsfree_hw.launch

We recommend working through the detailed tutorials that follow first: modify some code parameters to understand what the code does, test in simulation, and only then control the robot in a real environment.

If a call to the iFLYTEK SDK returns an error code, you can look it up on iFLYTEK's error code query page: iFLYTEK Error Code Information Query