RGBD camera experiment

HandsFree supports a variety of depth cameras, including the Xtion Pro, Orbbec (Obi Zhongguang) cameras and Kinect. This section explains how to use the Astra Dabai camera to run several small vision experiments.

1 Test camera

Following the Hardware Driver Test tutorial, first check that the depth camera works properly.

There are three ways to view the camera images. The first is to open the rviz visualization from a terminal:

roslaunch handsfree_camera view_astra_dabai.launch

or

roslaunch handsfree_camera view_camera.launch

Either command starts the camera driver node and the rviz visualization interface.

To start only the camera driver node, run:

roslaunch handsfree_camera camera.launch

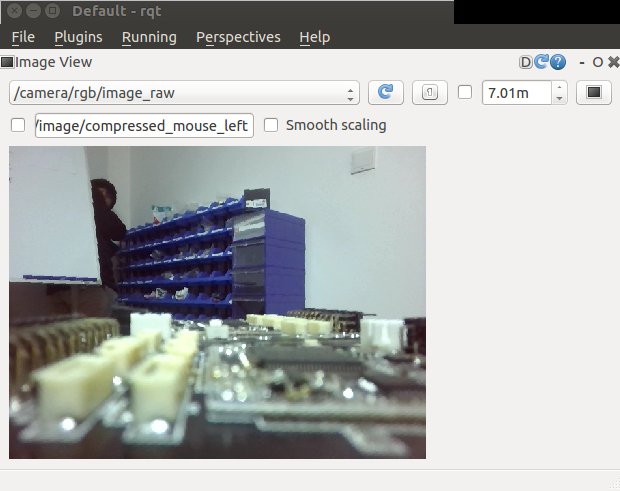

The second way is to view the images in rqt. Start the camera driver node first, then run rqt in a terminal.

If no Image View panel appears after rqt opens, add it manually from the menu: Plugins -> Visualization -> Image View. To switch to a different image, select another topic (for example /camera/rgb/image_raw or /camera/depth/image_raw) from the drop-down list at the top of the Image View panel.

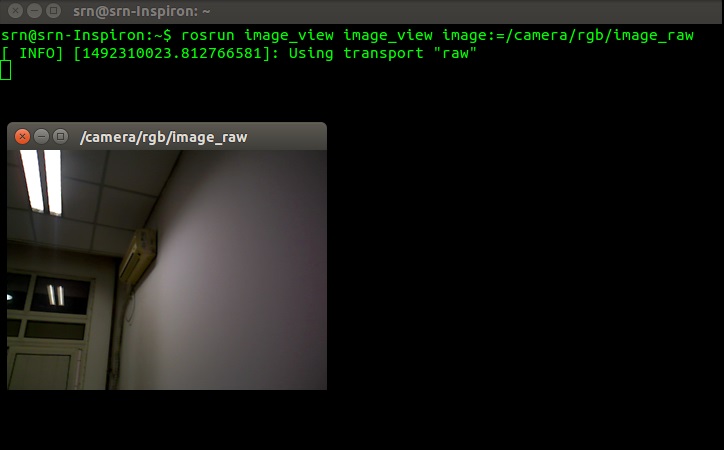

The third way is to run the image_view node from a terminal (again, start the camera driver node first). To view the RGB image:

rosrun image_view image_view image:=/camera/rgb/image_raw

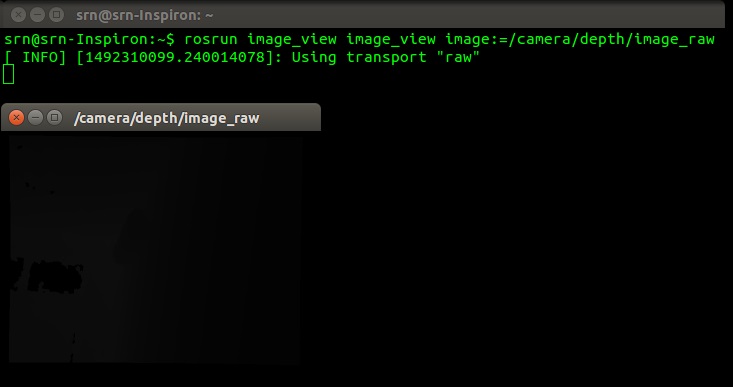

To view the depth image:

rosrun image_view image_view image:=/camera/depth/image_raw

If both the RGB image and the depth image display correctly, the depth camera is working, and you can move on to the vision experiments below.
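Besides checking the images by eye, a quick numeric check can confirm that the depth stream actually contains valid readings. The sketch below is a minimal, ROS-independent illustration. It assumes the depth image holds 16-bit values in millimetres with 0 marking a missing reading, which is the usual convention for OpenNI-style drivers publishing 16UC1 depth; verify this for your driver. The function name depth_frame_stats and the sample frame are hypothetical.

```python
from typing import List, Optional, Tuple


def depth_frame_stats(frame: List[List[int]]) -> Tuple[float, Optional[float], Optional[float]]:
    """Hypothetical helper: return (valid_fraction, nearest_m, farthest_m).

    Assumes raw values are in millimetres and 0 means "no reading".
    """
    total = 0
    valid_m = []
    for row in frame:
        for raw in row:
            total += 1
            if raw > 0:  # 0 is treated as an invalid / missing measurement
                valid_m.append(raw / 1000.0)  # millimetres -> metres
    if total == 0 or not valid_m:
        return 0.0, None, None
    return len(valid_m) / total, min(valid_m), max(valid_m)


# Made-up 2x3 frame: two invalid pixels, the rest between 0.5 m and 2 m.
sample = [
    [0, 500, 1200],
    [2000, 0, 800],
]
fraction, nearest, farthest = depth_frame_stats(sample)
print(f"valid: {fraction:.0%}, nearest: {nearest} m, farthest: {farthest} m")
```

On the robot you would feed this the pixel values of one frame from /camera/depth/image_raw (for example after converting the message with cv_bridge). A valid fraction close to zero suggests the camera sees nothing within its working range or the stream is not delivering depth data.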

2 RGBD mapping experiment

In the Radar Mapping chapter, we used a lidar to scan the surrounding environment and provide the distance data needed to build a map. Besides a lidar, we can also use a depth camera to emulate a lidar for mapping.

- Building a map with a depth camera follows essentially the same process as building one with a lidar. The only difference is that, instead of starting the lidar node, you start the depth camera's virtual laser node.

- The virtual laser's scanning angle is relatively narrow: a depth camera's horizontal field of view is typically around 57°, while a typical lidar covers 240° or more.
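To make the virtual laser idea concrete, the sketch below converts one row of a depth image into laser-style (angle, range) pairs with a pinhole camera model. This is the general approach used by ROS packages such as depthimage_to_laserscan; it is not necessarily what fake_laser.launch runs internally. The 640-pixel image width, the principal point at the image centre, and the focal length fx derived from an assumed 57° horizontal field of view are illustrative assumptions, not calibrated values for the Astra Dabai.

```python
import math
from typing import List, Tuple

# Assumed camera parameters (illustrative only, not calibrated values).
WIDTH_PX = 640
HFOV_DEG = 57.0
CX = (WIDTH_PX - 1) / 2.0  # principal point assumed at the image centre
# Pinhole model: tan(HFOV / 2) = (WIDTH / 2) / fx
FX = (WIDTH_PX / 2.0) / math.tan(math.radians(HFOV_DEG) / 2.0)


def row_to_scan(depth_row_m: List[float]) -> List[Tuple[float, float]]:
    """Convert one image row of depths (metres, 0 = invalid) into
    (angle_rad, range_m) pairs. Positive angles point to the left of the
    optical axis, matching the ROS laser-scan convention."""
    scan = []
    for u, z in enumerate(depth_row_m):
        # Bearing of the ray through pixel column u.
        angle = math.atan2(CX - u, FX)
        if z <= 0.0:
            scan.append((angle, float("inf")))  # no return for this beam
            continue
        x = (u - CX) * z / FX  # lateral offset of the 3D point in metres
        scan.append((angle, math.hypot(x, z)))  # straight-line distance
    return scan


# A flat wall 2 m in front of the camera, seen by every pixel of the row.
wall = [2.0] * WIDTH_PX
scan = row_to_scan(wall)
print(f"fx = {FX:.1f} px")
print(f"angular span = {math.degrees(scan[0][0] - scan[-1][0]):.1f} deg")
print(f"centre range = {scan[WIDTH_PX // 2][1]:.3f} m, edge range = {scan[0][1]:.3f} m")
```

The wall example shows two things: the whole virtual scan spans only about the camera's field of view, far less than a 240° lidar sweep, and beams near the image edges report longer ranges than the centre beam even though the wall is flat, because range is measured along each beam rather than straight ahead.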

Close all previously opened terminals, then open a new terminal and run the following command.

Start the RGBD mapping node:

roslaunch handsfree_vision fake_laser.launch

This launch file starts the chassis control driver, the camera, the mapping node and the keyboard control node.

Drive the robot around with the keyboard to build the map.

Save the map

First switch to the folder where you want to save the map (we recommend handsfree_2dslam/map):

roscd handsfree_2dslam/map

Then run the map saver node:

rosrun map_server map_saver -f my_rgbd_map

The -f option sets the file name of the saved map.
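map_saver writes the map as two files: an image (my_rgbd_map.pgm) and a YAML metadata file (my_rgbd_map.yaml) with fields such as resolution, origin, negate, occupied_thresh and free_thresh. The sketch below illustrates how map_server interprets each grey pixel when the map is loaded again in its default trinary mode, and how a grid cell maps to map coordinates. It assumes the thresholds map_saver typically writes (0.65 and 0.196) and an origin yaw of 0; read the actual values from your own .yaml file. The function names and the example metadata are hypothetical.

```python
from typing import Tuple

# Thresholds map_saver typically writes into the .yaml (assumption:
# check my_rgbd_map.yaml for the values actually used).
OCCUPIED_THRESH = 0.65
FREE_THRESH = 0.196


def classify_pixel(value: int, negate: bool = False) -> str:
    """Classify one 8-bit grey value of the saved .pgm the way map_server
    does in trinary mode."""
    # Darker pixels mean higher occupancy probability unless negate is set.
    p = value / 255.0 if negate else (255 - value) / 255.0
    if p > OCCUPIED_THRESH:
        return "occupied"
    if p < FREE_THRESH:
        return "free"
    return "unknown"


def cell_to_world(i: int, j: int, resolution: float,
                  origin: Tuple[float, float]) -> Tuple[float, float]:
    """Centre of grid cell (i = column, j = row counted from the bottom of
    the image) in map coordinates. Assumes the origin yaw is 0."""
    return (origin[0] + (i + 0.5) * resolution,
            origin[1] + (j + 0.5) * resolution)


# map_saver normally writes free cells as 254, occupied as 0, unknown as 205.
for grey in (254, 0, 205):
    print(grey, classify_pixel(grey))

# Made-up metadata: 5 cm cells, origin at (-10, -10).
print(cell_to_world(200, 100, 0.05, (-10.0, -10.0)))
```

The grey value 205 falls just above the free threshold ((255 - 205) / 255 ≈ 0.196078), which is why unknown space stays unknown after a save-and-reload round trip.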