ORB_SLAM v2

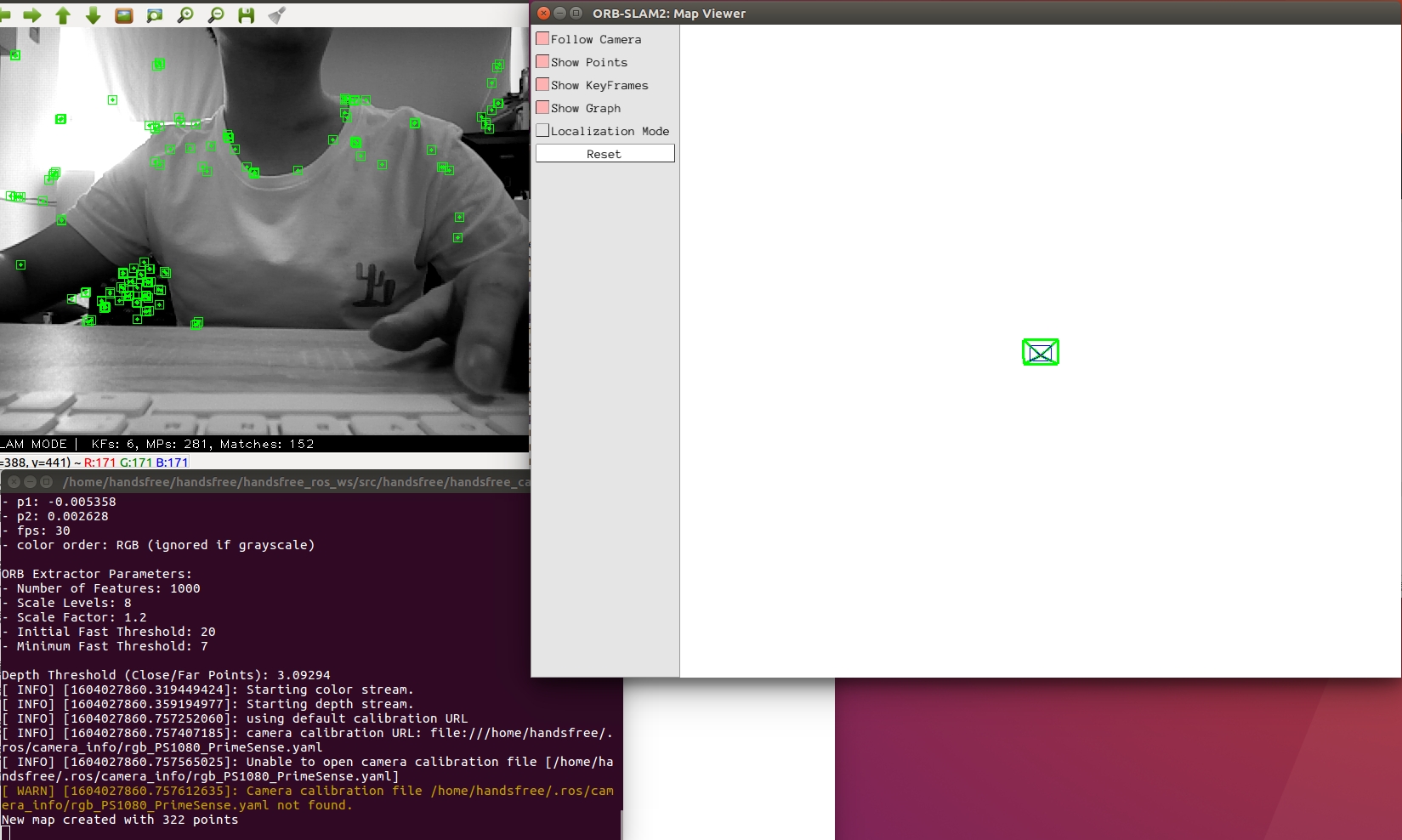

The video shows our HandsFree ROS robot building a map with its camera.

This section describes how to port the open-source project ORB_SLAM v2 to our HandsFree robot.

Before porting, let's review the relevant background.

About ORB_SLAM v2

Before we get to know ORB_SLAM v2, let's first understand ORB_SLAM.

About ORB_SLAM

Introduction

ORB_SLAM is a 3D localization and mapping algorithm based on ORB features, published by Raul Mur-Artal, J. M. M. Montiel and Juan D. Tardos in IEEE Transactions on Robotics in 2015. Its core idea is to use ORB (Oriented FAST and Rotated BRIEF) as the single feature type throughout the whole visual SLAM (simultaneous localization and mapping) pipeline. It is a complete SLAM system, including visual odometry, tracking, and loop closure detection. It is a monocular SLAM system based entirely on sparse feature points, with interfaces for monocular, stereo, and RGB-D cameras.

ORB_SLAM largely follows the algorithmic framework of PTAM but improves most of its components. The improvements can be summarized in four main points:

- ORB_SLAM uses ORB features. Feature matching and relocalization based on ORB descriptors are more viewpoint-invariant than in PTAM. In addition, matching features for new 3D points is more efficient, so the scene can be extended more promptly. Timely expansion of the scene determines whether subsequent frames can be tracked stably.

- ORB_SLAM incorporates a loop detection and closure mechanism to eliminate accumulated error. The system detects loops with the same method it uses for relocalization (matching the common points on the keyframes on both sides of the loop) and closes them by pose graph optimization.

- PTAM requires the user to specify the 2 frames used to initialize the system, with sufficient common points and sufficient translation between them. ORB_SLAM selects the 2 initialization frames automatically by detecting parallax.

- PTAM also requires new keyframes to provide sufficient parallax when expanding the scene, which makes expansion difficult. ORB_SLAM adopts a more robust keyframe and 3D point selection mechanism: it first adds new keyframes and 3D points as early as possible under loose conditions to keep tracking of subsequent frames robust, and then removes redundant keyframes and unstable 3D points under strict conditions to keep bundle adjustment (BA) efficient and accurate.
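The "add generously, then cull strictly" idea from the last point can be illustrated with a small sketch. This is not the ORB_SLAM implementation: the function name `redundant_keyframes` and the data layout are hypothetical, and the thresholds (90% of a keyframe's points seen in at least three other keyframes) are the values we recall from the ORB_SLAM paper, so treat them as assumptions. The sketch also judges every keyframe independently, whereas a real system removes keyframes one at a time.

```python
from typing import Dict, Set


def redundant_keyframes(observations: Dict[str, Set[int]],
                        min_other_views: int = 3,       # assumed threshold
                        redundancy_ratio: float = 0.9   # assumed threshold
                        ) -> Set[str]:
    """Return ids of keyframes whose map points are mostly seen elsewhere.

    `observations` maps a keyframe id to the set of map point ids it observes.
    """
    redundant = set()
    for kf, points in observations.items():
        if not points:
            continue  # nothing to judge for an empty keyframe
        covered = 0
        for p in points:
            # Count how many *other* keyframes also observe this point.
            others = sum(1 for other, pts in observations.items()
                         if other != kf and p in pts)
            if others >= min_other_views:
                covered += 1
        if covered / len(points) >= redundancy_ratio:
            redundant.add(kf)
    return redundant


if __name__ == "__main__":
    obs = {
        "kf0": {1, 2, 3, 4},
        "kf1": {1, 2, 3, 4, 5},
        "kf2": {1, 2, 3, 4, 6},
        "kf3": {1, 2, 3, 4, 7},
        "kf4": {8, 9},
    }
    print(redundant_keyframes(obs))
```

In this toy data only `kf0` contributes nothing new, because every point it sees is also seen by three other keyframes.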

Github source code of ORB_SLAM

Loop closing is divided into two main processes: loop detection and loop correction. Loop detection first finds candidates using bag of words (BoW), and then computes the similarity transformation with the Sim3 algorithm. Loop correction mainly consists of loop fusion and graph optimization of the Essential Graph.
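A similarity (Sim3) transformation combines a rotation, a translation and a scale factor; the scale term matters for monocular SLAM because absolute scale cannot be observed from a single camera and may drift. The sketch below only shows what applying such a transformation to a 3D point looks like, p' = s * R * p + t. It does not show how ORB_SLAM estimates the transformation, and the helper names `apply_sim3` and `rot_z` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def rot_z(angle_rad: float) -> List[List[float]]:
    """3x3 rotation matrix for a rotation about the z axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]


def apply_sim3(scale: float,
               rotation: Sequence[Sequence[float]],
               translation: Sequence[float],
               point: Sequence[float]) -> Tuple[float, float, float]:
    """Map a 3D point with p' = scale * rotation * p + translation."""
    rotated = [sum(rotation[r][c] * point[c] for c in range(3))
               for r in range(3)]
    x, y, z = (scale * rotated[r] + translation[r] for r in range(3))
    return (x, y, z)


if __name__ == "__main__":
    # Rotate (1, 0, 0) by 90 degrees about z, double it, then shift along x.
    print(apply_sim3(2.0, rot_z(math.pi / 2), (1.0, 0.0, 0.0), (1.0, 0.0, 0.0)))
```

With the scale fixed to 1 this reduces to an ordinary rigid-body transformation.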

Advantages

- It is a well-constructed visual SLAM system with excellent code structure, ideal for porting to real projects.

- It uses g2o as the back-end optimization tool, which effectively reduces the estimation error of the feature point positions and of its own pose.

- Using DBoW reduces the computational cost of finding feature matches and improves loop closure matching and relocalization. As an example of relocalization: if the robot's data stream is suddenly interrupted by some unexpected situation, ORB_SLAM can relocalize the robot in the map within a short time.

- It uses a "survival of the fittest" scheme for keyframe culling, which improves tracking robustness and lets the system run sustainably.

- Detailed experimental results on the best-known public datasets (KITTI and TUM) are provided to demonstrate its performance.

- The code is open source and also supports ROS.

Disadvantages

- The constructed maps are sparse point cloud maps. Only a portion of the feature points in the image are kept as key points, fixed in space for localization, making it difficult to depict the presence of obstacles in the map.

- During initialization, it is best to move slowly and point the camera at objects with rich features and geometric texture.

- Tracking is easily lost when rotating, especially under pure rotation. The system is sensitive to noise and does not have scale invariance.

- If pure visual SLAM is used for robot navigation, it may be less accurate or accumulate error and drift, even though the DBoW bag of words can be used for loop closure detection. Fusing VSLAM with an IMU improves accuracy and is better suited to robot navigation in practical applications.

About ORB_SLAM v2

ORB_SLAM2 is an improved version of ORB_SLAM. It removes the dependency on ROS, uses Pangolin for the display module instead, and provides direct interfaces for stereo and RGB-D cameras, so it is a complete SLAM solution for monocular, stereo and RGB-D cameras. It supports map reuse, loop closure detection and relocalization. ORB_SLAM2 runs in real time on a standard CPU in a wide range of settings, from a small handheld device indoors, to a drone flying in a factory environment, to a car driving in a city. Its back-end uses bundle adjustment (BA) with monocular and stereo observations, which allows the trajectory to be estimated accurately at metric scale. In addition, ORB_SLAM2 includes a lightweight localization mode that tracks unmapped areas using visual odometry and matches against existing map points, which allows zero-drift localization.

More about ORB_SLAM v2

Github source code for ORB_SLAM v2

Related datasets

About ORB

ORB (Oriented FAST and Rotated BRIEF) is an algorithm for fast feature point extraction and description.

The ORB algorithm is divided into two parts: feature point extraction and feature point description. Feature extraction is developed from the FAST (Features from Accelerated Segment Test) algorithm, and feature description is an improvement of the BRIEF (Binary Robust Independent Elementary Features) description algorithm. The most important property of ORB is its computational speed. First, it detects feature points with FAST, which is as fast as its name implies. Second, the descriptors are computed with BRIEF, and their binary string representation not only saves storage space but also greatly reduces matching time.
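To see why binary descriptors are cheap to match, note that comparing two of them only needs an XOR followed by a bit count (the Hamming distance). The following is a minimal sketch with toy 8-bit descriptors stored as Python integers; real ORB descriptors are 256 bits long, and the function names and the `max_distance` threshold here are hypothetical, chosen only for illustration.

```python
from typing import List, Sequence, Tuple


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(query: Sequence[int],
                      train: Sequence[int],
                      max_distance: int) -> List[Tuple[int, int, int]]:
    """Brute-force nearest-neighbour matching under the Hamming distance.

    Returns (query_index, train_index, distance) for every query descriptor
    whose best match is within `max_distance` bits.
    """
    matches = []
    for qi, q in enumerate(query):
        best = None  # (train_index, distance) of the closest descriptor so far
        for ti, t in enumerate(train):
            d = hamming_distance(q, t)
            if best is None or d < best[1]:
                best = (ti, d)
        if best is not None and best[1] <= max_distance:
            matches.append((qi, best[0], best[1]))
    return matches


if __name__ == "__main__":
    query = [0b10110010, 0b00001111]
    train = [0b00001110, 0b10110011, 0b11111111]
    print(match_descriptors(query, train, max_distance=2))
```

A floating-point descriptor would need a Euclidean distance over many values for each comparison, which is where the speed advantage of the binary form comes from.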

About SLAM

SLAM stands for Simultaneous Localization And Mapping and was first proposed by Hugh Durrant-Whyte and John J. Leonard. SLAM is mainly used to solve the problems of localization, navigation and map construction for mobile robots operating in unknown environments. Simply put, the robot starts moving from an unknown position in an unknown environment, localizes itself during the movement based on position estimates and the map, and builds the map incrementally based on its own localization, which together give it autonomous localization and navigation.

SLAM usually consists of the following parts: feature extraction, data association, state estimation, state update, and feature update. SLAM can be used in both 2D and 3D motion applications.

Prepare to start

- Install the dependent software: OpenCV (computer vision library)

- We recommend setting up our official HandsFree and ROS development environment (see the official configuration link), in which case you can skip installing OpenCV.

This tutorial uses the Xtion Pro, a depth camera from the Xtion series, as the example.

Install ORB_SLAM v2

Install other dependencies

sudo apt-get install libglew-dev -y

sudo apt-get install libpython2.7-dev -y

sudo apt-get install ffmpeg libavcodec-dev libavutil-dev libavformat-dev libswscale-dev -y

sudo apt-get install libdc1394-22-dev libraw1394-dev -y

sudo apt-get install libjpeg-dev libpng12-dev libtiff5-dev libopenexr-dev -y

Installing Pangolin

Pangolin is a lightweight OpenGL input/output and video display library that wraps OpenGL. It can be used for 3D visualization and 3D navigation of visual maps, accepts various types of video input, and can record video and input data for debugging.

mkdir -p ~/handsfree/orbslam2_ros_ws/

cd ~/handsfree/orbslam2_ros_ws/

git clone https://gitee.com/HANDS-FREE/Pangolin.git

mkdir ~/handsfree/orbslam2_ros_ws/Pangolin/build

cd ~/handsfree/orbslam2_ros_ws/Pangolin/build

cmake ..

make

Installing Eigen3

Eigen is a high-level C++ library that efficiently supports linear algebra, matrix and vector operations, numerical analysis and related algorithms.

sudo apt-get install -y libeigen3-dev

Installing ORB_SLAM2 (DBoW2 and g2o are included)

With the DBoW2 library, an image can be easily transformed into a low-dimensional vector representation that performs well for loop closure detection in SLAM systems, ORB_SLAM2 in particular.
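The idea of turning an image into a vector and comparing vectors can be sketched as follows. This is a simplification and not the DBoW2 API: we assume each feature has already been assigned a visual word id, use plain normalized word counts instead of the tf-idf weights and vocabulary tree of the real library, and score two vectors with an L1-based similarity of the kind described in the DBoW2 paper. The function names `bow_vector` and `l1_score` are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable


def bow_vector(word_ids: Iterable[int]) -> Dict[int, float]:
    """Sparse, L1-normalized histogram of the visual words found in an image."""
    counts = Counter(word_ids)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {word: count / total for word, count in counts.items()}


def l1_score(v1: Dict[int, float], v2: Dict[int, float]) -> float:
    """Similarity in [0, 1] for normalized vectors: 1 is identical, 0 is disjoint."""
    words = set(v1) | set(v2)
    diff = sum(abs(v1.get(w, 0.0) - v2.get(w, 0.0)) for w in words)
    return 1.0 - 0.5 * diff


if __name__ == "__main__":
    image_a = bow_vector([1, 1, 2, 3])   # word ids are made-up examples
    image_b = bow_vector([1, 2, 2, 3])
    image_c = bow_vector([7, 8, 9])
    print(l1_score(image_a, image_b), l1_score(image_a, image_c))
```

A loop closure candidate is then simply a past keyframe whose score against the current frame is high enough, which is far cheaper than matching raw features against every stored keyframe.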

g2o is a general graph optimization framework.

cd ~/handsfree/orbslam2_ros_ws/

git clone https://gitee.com/HANDS-FREE/ORB_SLAM2.git

cd ~/handsfree/orbslam2_ros_ws/ORB_SLAM2

chmod +x build.sh

./build.sh

Modify the .bashrc file

echo "export ROS_PACKAGE_PATH=\${ROS_PACKAGE_PATH}:~/handsfree/orbslam2_ros_ws/ORB_SLAM2/Examples/ROS/" >> ~/.bashrc

source ~/.bashrc
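If the ROS build later fails to find the package, one thing worth checking is whether the directory really ended up in `ROS_PACKAGE_PATH`. The following is a minimal sketch of such a check; the helper name `path_list_contains` is hypothetical, and the sketch assumes a Linux-style, colon-separated path list.

```python
import os


def path_list_contains(path_list: str, directory: str) -> bool:
    """True if `directory` is one of the entries of a colon-separated path list.

    Entries are compared after expanding `~` and dropping trailing slashes.
    """
    wanted = os.path.normpath(os.path.expanduser(directory))
    entries = [entry for entry in path_list.split(":") if entry]
    return any(os.path.normpath(os.path.expanduser(entry)) == wanted
               for entry in entries)


if __name__ == "__main__":
    ros_path = os.environ.get("ROS_PACKAGE_PATH", "")
    target = "~/handsfree/orbslam2_ros_ws/ORB_SLAM2/Examples/ROS"
    print(path_list_contains(ros_path, target))
```

Run it in a new terminal, or after `source ~/.bashrc`, so that the updated environment is visible to the script.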

Compiling ROS packages

cd ~/handsfree/orbslam2_ros_ws/ORB_SLAM2

./build_ros.sh

Run ORB_SLAM v2

- Open a terminal and enter the command

roslaunch orb_slam2 orb_slam2.launch

In the "ORB-SLAM2: Map Viewer" window:

- Scroll the mouse wheel to zoom in and out

- Hold the left mouse button and drag to move the view

- Hold the right mouse button and drag to rotate the view

Now start the robot's keyboard control node and drive the robot around to begin building the map.